Aligning AI to Human Values Means Picking the Right Metrics

From time to time, the Partnership may curate research and ideas that might be of interest to our community, including from PAI Fellows or other voices. The views, information, and opinions expressed in this blog are solely those of the author and do not reflect the position of the Partnership on AI or its Partner organizations. This post discusses Facebook and Google, which are funding members of PAI.

Virtually every AI algorithm is designed around the idea of optimization: acting in a way that maximizes some metric. But optimizing for the wrong thing can cause a lot of harm. A social media product that optimizes for user engagement may end up figuring out how to addict us to clickbait and outrage, a scheduling system that maximizes efficiency may produce erratic schedules that interfere with workers’ lives, and algorithmic profit maximization can end up charging poorer people more for the same product. But there are also metrics that attempt to capture the deep human outcomes we care about, and some product teams are already trying to incorporate them.

The Partnership on AI’s “What Are You Optimizing For?” project aims to document the metrics that AI designers and operators are using today, to support the community dedicated to ensuring that we are using the right metrics, and ultimately to make human-centered metrics a standard part of AI practice. While AI alignment research is typically concerned with ensuring that future artificial general intelligence respects human values, there are alignment issues with today’s existing narrow AI systems too.

Metrics which attempt to capture real-world negative effects are already widely used in commercial AI engineering, though this practice is not particularly well documented or discussed. Companies have devised metrics to reduce clickbait, detect misinformation, ensure that new artists on a music platform get a fair shot at building an audience, detect addictive apps in an app store, and more.

These are all worthy interventions, but what should the broader goals of an AI system be? How can we evaluate whether any particular product is ultimately producing positive outcomes for people and society? In principle we could create metrics that capture important aspects of the effect of an AI system on human lives, just as cities and countries today record a large variety of statistical indicators. These metrics would be useful to the teams building and operating the system, to researchers who want to understand what the system is doing, and as a transparency and accountability tool.

This sort of metric-based management of broad social outcomes is already happening inside platform companies, mostly quietly. Facebook incorporated “well-being” metrics into its News Feed recommendation system in early 2018, while YouTube began integrating “user satisfaction” and “social responsibility” metrics around 2015. This post documents these efforts, plus several more hypothetical ways that metrics could be used to steer AI in healthy directions. But first we need appropriate metrics.

Measuring Well-being

Well-being metrics attempt to measure a person’s subjective experience of life in a very general sense. They were originally developed in psychology in the late 20th century, but in the past few decades they have been developed into tools for governance and policy-making. The core question used in many surveys is “Overall, how satisfied are you with life as a whole these days?”

This is a surprisingly powerful question. Because it asks people to reflect on their life as a whole, the answers are not much affected by immediate moods or feelings. Experimentally, it seems to align with the way people make major life decisions, and works similarly across countries and cultures. But asking just this one question doesn’t give a very complete picture of someone’s life, which is why real well-being surveys like the OECD’s Better Life Index include many other questions about things like recent emotional experience, income, health, education, community involvement, and so on.

No metric can reveal the details of individual lives, and optimizing solely for survey responses is likely to fail in predictable ways. Nonetheless, there is important information here, which needs to be combined with other types of user research. It’s taken a long time to develop good well-being metrics, and AI product developers probably shouldn’t be in the business of creating their own. For this reason, there is now an IEEE standard which compiles indicators from a variety of sources for consideration by technical teams.

Facebook’s well-being changes

Facebook’s 2018 changes to the News Feed ranking algorithm provide a detailed, well-documented example of how a large platform can incorporate well-being metrics into an optimizing system. They also raise some important questions about how large companies should use metrics. In late 2017, an unusual post appeared on an official Facebook blog:

What Do Academics Say? Is Social Media Good or Bad for Well-Being?

According to the research, it really comes down to how you use the technology. For example, on social media, you can passively scroll through posts, much like watching TV, or actively interact with friends — messaging and commenting on each other’s posts. Just like in person, interacting with people you care about can be beneficial, while simply watching others from the sidelines may make you feel worse.

This is in line with the conclusion that outside researchers had come to:

passively using social network sites provokes social comparisons and envy, which have negative downstream consequences for subjective well-being. In contrast, when active usage of social network sites predicts subjective well-being, it seems to do so by creating social capital and stimulating feelings of social connectedness.

Soon after, Facebook began to talk publicly about its efforts to encourage “meaningful social interactions.” A post by Zuckerberg suggests that this is a proxy for well-being:

The research shows that when we use social media to connect with people we care about, it can be good for our well-being … I’m changing the goal I give our product teams from focusing on helping you find relevant content to helping you have more meaningful social interactions.

This managerial goal turned into algorithmic changes, as described by the head of the News Feed product:

Today we use signals like how many people react to, comment on or share posts to determine how high they appear in News Feed. With this update, we will also prioritize posts that spark conversations and meaningful interactions between people. To do this, we will predict which posts you might want to interact with your friends about, and show these posts higher in feed (Mosseri 2018).

In other words, Facebook developed a probabilistic model to predict “meaningful interactions,” a proxy for well-being, and incorporated this into the News Feed ranking algorithm. What’s a “meaningful interaction”? The only concrete description comes from Facebook’s Q4 2017 earnings call, where Zuckerberg explains that this metric – the training data for the new predictive model – comes from user panel surveys:

So the thing that we’re going to be measuring is basically, the number of interactions that people have on the platform and off because of what they’re seeing that they report to us as meaningful.

… the way that we’ve done this for years is we’ve had a panel, a survey, of thousands of people who [we] basically asked, what’s the most meaningful content that they had seen on the platform or they have seen off the platform. And we design our systems in order to be able to get to that ground truth of what people, real people are telling us is that high-quality experience

This is the point where the people who might be most affected by product decisions were consulted. All of this work is on their behalf. It seems like there should also be consultation on the question of which metric to watch — there are technocratic and paternalistic traps here, no matter how good one’s intentions might be. But it’s not quite clear what it would mean to ask potentially billions of users which metric they would prefer.

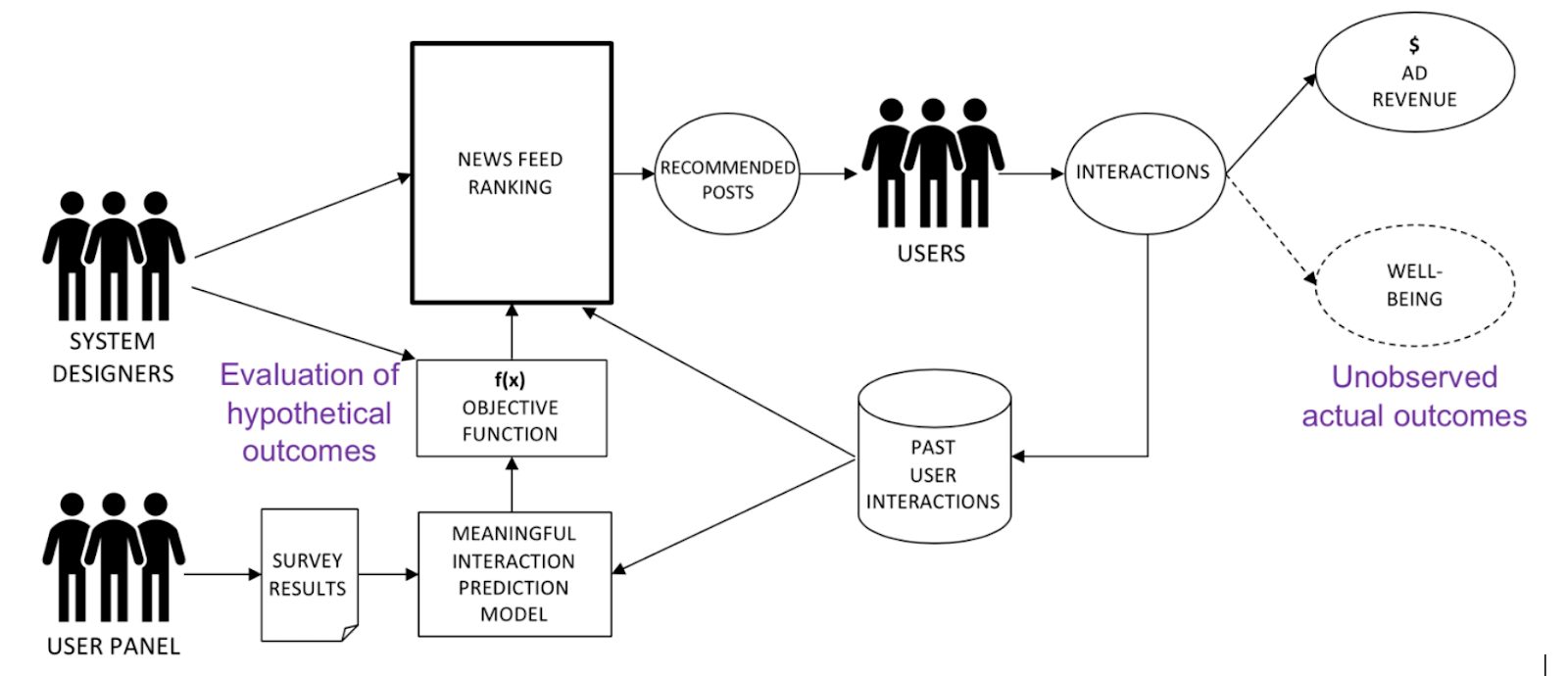

So while users were not consulted in choosing either the primary or the proxy metric, they were surveyed to produce the training data used to predict “meaningful interactions.” The overall system looks like this:

A reconstruction of Facebook’s 2018 “meaningful social interaction” changes

The “objective function” in this diagram is a piece of code that defines how “good” a particular hypothetical output would be. It is the thing an optimizing system optimizes: the machine translation of a human goal. Although there are many ways to change a product that might improve well-being (for example, features that limit “screen time”), modern AI systems are usually designed to optimize a small number of key metrics that are encoded into an objective function.
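To make the idea concrete, here is a minimal sketch of a ranking objective that blends a conventional engagement prediction with a predicted “meaningful interaction” probability. Facebook has not published its formula; the `Post` fields, the linear weighting, and the example numbers are all hypothetical, chosen only to show how changing the objective can reorder the same candidates.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    p_engage: float      # hypothetical: predicted chance of a like, comment, or share
    p_meaningful: float  # hypothetical: predicted chance of an interaction users would call meaningful


def objective(post: Post, weight_meaningful: float) -> float:
    """Score one candidate post; higher scores are shown higher in the feed.

    A weighted sum of two predictions. The weighting is an illustrative
    assumption, not Facebook's actual objective function.
    """
    return (1.0 - weight_meaningful) * post.p_engage + weight_meaningful * post.p_meaningful


def rank(posts: list[Post], weight_meaningful: float) -> list[str]:
    """Order candidate posts by the objective, best first."""
    ordered = sorted(posts, key=lambda p: objective(p, weight_meaningful), reverse=True)
    return [p.post_id for p in ordered]


candidates = [
    Post("viral_video", p_engage=0.30, p_meaningful=0.05),
    Post("friend_update", p_engage=0.20, p_meaningful=0.25),
]
print(rank(candidates, weight_meaningful=0.0))  # ['viral_video', 'friend_update']
print(rank(candidates, weight_meaningful=0.5))  # ['friend_update', 'viral_video']
```

Raising `weight_meaningful` from 0 to 0.5 moves the friend’s update above the viral video. A weighted sum is just the simplest choice; a production system would likely combine many more predicted signals.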

Facebook changed both the metric given to the News Feed team, and the objective function that ultimately drives the News Feed ranking algorithm. Unfortunately, there is no public account of the well-being effects of these changes. But in that same investor call there is a record of the business effects of a related “meaningful social interaction” change, this one concerning Facebook’s video product rather than the News Feed:

We estimate these updates decreased time spent on Facebook by roughly 5% in the fourth quarter. To put that another way: we made changes that reduced time spent on Facebook by an estimated 50 million hours every day to make sure that people’s time is well spent.

This is clearly a reduction in short-term engagement, which shows that the objective did shift in a noticeable way, but it’s not clear what the longer-term effects were. A genuinely better product might attract more customers.
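Taking the quoted estimates at face value, a quick calculation shows the scale involved. Writing T for total daily time spent on Facebook before the video changes (our notation, not Facebook’s), a roughly 5% drop that equals about 50 million hours per day implies:

```latex
0.05\,T \approx 5 \times 10^{7}\ \text{hours/day}
\quad\Longrightarrow\quad
T \approx \frac{5 \times 10^{7}}{0.05} = 10^{9}\ \text{hours/day}
```

That is on the order of a billion hours a day. This is a back-of-envelope reading of the two quoted figures, not a number Facebook reported directly.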

The biggest weakness of this work is that there was no public evaluation of the results. Did the plan work? How well, and for whom? This is especially important because the link between “meaningful interactions” and well-being is theoretical, deduced from previous research into active versus passive social media use.
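An evaluation that answers “for whom?” would report effects per group rather than a single average. A minimal sketch, assuming a randomized rollout, a 0 to 10 life-satisfaction survey answer per respondent, and invented group labels and data; a real evaluation would need a proper experimental design and far larger samples.

```python
from math import sqrt
from statistics import mean, stdev


def effect_with_se(treated: list[float], control: list[float]) -> tuple[float, float]:
    """Difference in mean survey score (treated minus control) and its standard error.

    Uses the unequal-variance (Welch) standard error; each arm needs at least two responses.
    """
    diff = mean(treated) - mean(control)
    se = sqrt(stdev(treated) ** 2 / len(treated) + stdev(control) ** 2 / len(control))
    return diff, se


def effect_by_group(responses: list[tuple[str, str, float]]) -> dict[str, tuple[float, float]]:
    """Split (group, arm, score) survey rows by user group and estimate the effect in each."""
    arms_by_group: dict[str, dict[str, list[float]]] = {}
    for group, arm, score in responses:
        arms = arms_by_group.setdefault(group, {"treated": [], "control": []})
        arms[arm].append(score)
    return {g: effect_with_se(a["treated"], a["control"]) for g, a in arms_by_group.items()}


# Invented 0-10 life-satisfaction answers from a hypothetical randomized rollout.
responses = [
    ("heavy_users", "treated", 7), ("heavy_users", "treated", 8),
    ("heavy_users", "control", 6), ("heavy_users", "control", 7),
    ("light_users", "treated", 6), ("light_users", "treated", 7),
    ("light_users", "control", 7), ("light_users", "control", 6),
]
for group, (diff, se) in effect_by_group(responses).items():
    print(f"{group}: {diff:+.2f} (SE {se:.2f})")
```

With two answers per cell the standard errors swamp any effect; the point is the shape of the analysis, not these numbers.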

The general approach

Regardless of the virtues of what Facebook did and didn’t do, trying to correct for the effects of a large optimizing system by changing the objective based on people’s feedback is a very general approach. Done well, the process might look like this:

- Select a well-being metric, perhaps from existing frameworks. This stage is where the involvement of the people affected by the optimization matters most.

- Define a proxy metric for which data can actually be collected, that is scoped to only those people affected by the system, and that can be estimated for hypothetical system outputs.

- Use this metric as a performance measure for the team building and operating the system.

- The team may choose to translate the metric into code, for example as modifications to the system’s objective function. They may also find extra-technical interventions that improve the metric.

- The chosen well-being metric is not fixed, but must be continuously re-evaluated to ensure it is appropriate to changing conditions and does not cause side effects of its own.
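The second step often means fitting a model to survey answers, so that the proxy can be estimated for outputs nobody was asked about, roughly what Zuckerberg describes with Facebook’s survey panel. A minimal sketch, assuming two made-up features (whether a post comes from a friend, and a scaled count of friends’ comments) and a handful of invented panel labels; plain logistic regression by gradient descent stands in for whatever model a real team would use.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights: list[float], bias: float, x: list[float]) -> float:
    """Estimated probability that a (possibly hypothetical) item would be reported as meaningful."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def fit_proxy(features: list[list[float]], labels: list[int],
              lr: float = 0.5, epochs: int = 2000) -> tuple[list[float], float]:
    """Fit logistic regression to survey labels with batch gradient descent."""
    n, d = len(labels), len(features[0])
    weights, bias = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(features, labels):
            err = predict(weights, bias, x) - y  # gradient of log loss w.r.t. the logit
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


# Hypothetical features: [post is from a friend (0/1), friends' comments scaled to 0-1]
panel_features = [[1, 0.8], [1, 0.6], [1, 0.1], [0, 0.7], [0, 0.1], [0, 0.0]]
panel_labels = [1, 1, 0, 1, 0, 0]  # invented "was this meaningful?" survey answers

w, b = fit_proxy(panel_features, panel_labels)
# The fitted proxy can now score items that were never surveyed.
print(round(predict(w, b, [1, 0.9]), 2), round(predict(w, b, [0, 0.0]), 2))
```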

Other companies have done similar things, and the general pattern could apply in many other contexts.

During the period of 2012-2016, YouTube was striving to reach one billion hours of daily user watch time. Yet this “time spent” metric was not absolute, as the company made decisions to suppress clickbait and ultimately added “user satisfaction” and “social responsibility” metrics to its algorithmic objective function. YouTube hasn’t said exactly what these metrics are or why they were used, so we don’t really know what they target or how effective they are. As in the Facebook case, a decision to penalize clickbait reduced short-term engagement, but Measure What Matters by John Doerr documents that engagement recovered within a few months as “people sought out more satisfying content.”

There are many other places where the careful choice of metrics might have positive effects on people’s lives. Most “gig economy” workers are employed as independent contractors, and many experience week-to-week fluctuations in income as a major source of economic instability and anxiety. An appropriate measure of income stability could be used to smooth worker income by distributing fluctuations in demand between workers and perhaps across time.
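One candidate measure of income stability is the coefficient of variation of a worker’s weekly income, and one way to act on it is a dispatch rule that steers work toward whoever is having a thin week. Both are assumptions for illustration, not a method any platform is known to use; the function names and numbers are hypothetical, and the sketch ignores smoothing across time and everything else a real dispatcher must weigh.

```python
from statistics import mean, pstdev


def income_instability(weekly_income: list[float]) -> float:
    """Coefficient of variation of weekly income: 0 means perfectly steady pay."""
    avg = mean(weekly_income)
    return pstdev(weekly_income) / avg if avg > 0 else 0.0


def assign_job(available: list[str], earned_this_week: dict[str, float],
               typical_week: dict[str, float]) -> str:
    """Hypothetical rule: give the next job to the available worker who is
    furthest below their own typical weekly income, in relative terms."""
    return max(available,
               key=lambda w: (typical_week[w] - earned_this_week[w]) / typical_week[w])


print(income_instability([400, 400, 400]))  # 0.0
print(income_instability([200, 600]))       # 0.5
print(assign_job(["ana", "ben"],
                 earned_this_week={"ana": 300, "ben": 100},
                 typical_week={"ana": 400, "ben": 200}))  # ben
```

The team-level metric could then be the average instability across workers, with the assignment rule as just one possible intervention on it.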

Optimizing systems can also incorporate the perspectives of non-users. A ride-sharing platform may cause traffic jams for non-riders, and environmental effects are potentially global. There is a whole field of “multi-stakeholder optimization” that studies algorithms designed to account for these types of issues. A product recommendation system is typically designed to present users with products they are most likely to buy, but could also incorporate climate change concerns through estimates of product carbon footprint. These could be used to increase the visibility of alternative low-carbon products, with the goal of minimizing the metric of carbon intensity per unit revenue.

A hypothetical product recommendation system that tries to reduce the carbon footprint of products sold.
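A system like this can be sketched by subtracting a price on expected emissions from expected revenue, then measuring carbon intensity per unit revenue over what gets shown. A penalty term is only one simple way to trade the two off, and it is our choice rather than something this post specifies; the products, probabilities, and footprints are invented, and the sketch assumes showing an item does not change its purchase probability.

```python
from dataclasses import dataclass


@dataclass
class Product:
    name: str
    p_buy: float    # hypothetical predicted probability this user buys it
    price: float    # revenue per sale
    kg_co2e: float  # hypothetical estimated carbon footprint per unit sold


def score(p: Product, carbon_price: float) -> float:
    """Expected revenue minus expected emissions priced at carbon_price per kg CO2e."""
    return p.p_buy * (p.price - carbon_price * p.kg_co2e)


def recommend(catalog: list[Product], k: int, carbon_price: float) -> list[Product]:
    """Top-k products under the carbon-penalized score."""
    return sorted(catalog, key=lambda p: score(p, carbon_price), reverse=True)[:k]


def carbon_intensity(shown: list[Product]) -> float:
    """Expected kg CO2e per unit of expected revenue for the products shown."""
    emissions = sum(p.p_buy * p.kg_co2e for p in shown)
    revenue = sum(p.p_buy * p.price for p in shown)
    return emissions / revenue


catalog = [
    Product("standard_kettle", p_buy=0.30, price=40.0, kg_co2e=20.0),
    Product("efficient_kettle", p_buy=0.25, price=45.0, kg_co2e=8.0),
    Product("toaster", p_buy=0.10, price=30.0, kg_co2e=15.0),
]
for carbon_price in (0.0, 1.0):
    top = recommend(catalog, k=1, carbon_price=carbon_price)
    print(carbon_price, top[0].name, round(carbon_intensity(top), 3))
```

With no carbon price the standard kettle is recommended (intensity 0.5 kg per unit of revenue); at a price of 1 per kg the efficient kettle wins (about 0.178), at the cost of slightly lower expected revenue.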

In many other important cases it’s not as clear what the “right” metric might be. A content recommendation system filters the vast sea of available information for users. There has been extensive discussion of the need for “diverse” content recommendations, especially when filtering news content. However, “diverse” could mean different things and there is no consensus on which definition is best. Some perspectives start from political principles, while the technical community has developed a range of objective functions that are designed to be “diverse.” Choosing a metric is itself a hard problem: the intersection of what is right with what can be measured.
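To see how definitions can conflict, here are two hypothetical measures of diversity, breadth of topics and balance of viewpoints, which rank the same two feeds in opposite order. The topic and viewpoint labels are invented, and neither measure is claimed to be the right one.

```python
from math import log2


def topic_coverage(items: list[tuple[str, str]]) -> int:
    """Diversity as breadth: how many distinct topics the list covers."""
    return len({topic for topic, _ in items})


def viewpoint_balance(items: list[tuple[str, str]]) -> float:
    """Diversity as balance: Shannon entropy (in bits) of the viewpoint labels."""
    counts: dict[str, int] = {}
    for _, viewpoint in items:
        counts[viewpoint] = counts.get(viewpoint, 0) + 1
    n = len(items)
    return sum((c / n) * log2(n / c) for c in counts.values())


# Each item is a hypothetical (topic, viewpoint) label pair.
many_topics_one_side = [("economy", "left"), ("health", "left"),
                        ("sports", "left"), ("science", "left")]
one_topic_both_sides = [("economy", "left"), ("economy", "right"),
                        ("economy", "left"), ("economy", "right")]

for name, feed in [("many_topics_one_side", many_topics_one_side),
                   ("one_topic_both_sides", one_topic_both_sides)]:
    print(name, topic_coverage(feed), viewpoint_balance(feed))
```

The first feed scores 4 topics but 0 bits of viewpoint balance; the second scores 1 topic but the maximum 1 bit for two viewpoints. An optimizer given either metric would push toward a very different feed.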

Picking Metrics

An attempt to optimize for well-being is an attempt to benefit a particular group of people, who need to have a say in what is done on their behalf. Whether a community of place or a community of users, this is the basic reason why community involvement is necessary. There are many ways to involve users in the creation of software, such as the field of participatory design. Yet collaboratively picking a metric is a unique challenge; it’s not obvious how a large platform would ask a billion users what to optimize for, or even how to frame the question.

Nor can the choice be static. Both the primary metric and its proxy need to be able to change and adapt. Any measure that becomes a target incentivizes various kinds of cheating and gaming behavior, so the useful lifespan of a metric may be limited. This also responds to the concern of AI researchers who warn that optimization of a single objective function can have disastrously negative side effects. In this context, there are always humans supervising and operating the AI system, and they are free to change the objective function as needed.

But more importantly, the world can change. Perhaps a hospital closure suddenly makes access to emergency health care a community priority. Or perhaps after careful management, income stability ceases to be the chief concern of gig economy workers. Like the idea that targeting a measure changes its meaning, there are many names for the idea that measures must continually change. “Double-loop learning” is the idea that an adaptive organization has two learning processes operating simultaneously: it learns how to progress towards its goals, while continually re-evaluating the desirability of those goals.

Narrow Alignment

A concern with metrics fits neatly into one of the deep unsolved problems of AI theory. As optimization becomes more powerful and agents become more autonomous, the specification of precisely the right goal becomes a more serious problem. Like the genie in the lamp, machines are apt to take us literally in disastrous ways. In Stuart Russell’s example, if I ask a robot to fetch me coffee I don’t mean “at all costs,” so it shouldn’t kill anyone while trying to achieve this goal. “Alignment,” or the creation of artificial agents which act according to human values, is a major unsolved problem for artificial general intelligence (AGI).

The builders of today’s “narrow” AI systems face a similar challenge of encoding the correct goals in machine form; first generation systems used obvious metrics like engagement and efficiency which ended up creating negative side effects. But this “narrow alignment” problem seems much easier to address than AGI alignment, because it’s possible to learn from how existing systems behave in the real world. Narrow alignment is worth working on for its own sake, and may also give us critical insight into the general alignment problem.

What are you optimizing for?

If you find the quantitative management of the subjective experience of the members of society troubling, you are not alone. There was widespread concern when Facebook published a paper on “emotional contagion,” for example. This sort of social engineering at scale has all the problems of large AI systems, plus all the problems of public policy interventions.

My argument is not so much that one should use AI to optimize for well-being. Rather, we live in a world where large-scale optimization is already happening. We can choose not to evaluate or adjust these systems, but there is little reason to imagine that ignorance and inaction would be better. Mistakes will be made if we try to optimize for human happiness by quantitative means, but then, doing nothing is also a mistake. Metrics, however flawed and incomplete, are a fundamental part of a positive future for AI.

If you are working on the problem of optimizing for the right thing, I’d love to hear from you at jonathan@partnershiponai.com.